FuzzySVC

- class fsvm.FuzzySVC(*, distance_metric='centroid', centroid_metric='euclidean', membership_decay='linear', beta=0.1, balanced=True, C=1.0, kernel='rbf', degree=3, gamma='scale', coef0=0.0, shrinking=True, probability=False, tol=0.001, cache_size=200, verbose=False, max_iter=-1, decision_function_shape='ovr', break_ties=False, random_state=None)

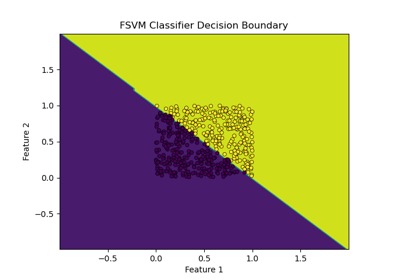

A Fuzzy Support Vector Machine classifier.

- Parameters:

- distance_metric: {'centroid', 'hyperplane'} or callable, default='centroid'

Method to compute the distance of a sample to the expected value, which serves as the basis for calculating the membership degree. The membership decay function is then applied to this distance to compute the actual membership degree, as in [1].

If distance_metric='centroid', the fuzzy membership is calculated from the distance of the sample to the centroid of its class. If distance_metric='hyperplane', the fuzzy membership is calculated from the distance of the sample to the hyperplane of a pre-trained SVM classifier. If a callable is passed, it should take the input data X and return the values of a custom metric to use as the membership base, e.g. the time elapsed since a sample was collected. Note that samples with larger distance_metric values receive a lower membership degree.

- centroid_metric: {'euclidean', 'manhattan'}, default='euclidean'

Metric to use for the computation of centroids of each class.

This parameter is only used when distance_metric='centroid'.

If centroid_metric='euclidean', the centroid for the samples corresponding to each class is the arithmetic mean, which minimizes the sum of squared L2 distances. If centroid_metric='manhattan', the centroid is the feature-wise median, which minimizes the sum of L1 distances.

- membership_decay: {'exponential', 'linear'} or callable, default='linear'

Method to compute the decay function for membership as in [1]. If a callable is passed, it should take the output of the distance_metric method and return the final membership degree in the interval [0, 1].

- beta: float, default=0.1

Parameter for the exponential decay function, determining the steepness of the decay as in [1]. Should be in the interval [0, 1]. This parameter is only used when membership_decay='exponential'.

- balanced: bool, default=True

Whether to use the values of y to automatically adjust weights inversely proportional to class frequencies in the input data as n_samples / (n_classes * np.bincount(y)).

- C: float, default=1.0

Regularization parameter. The strength of the regularization is inversely proportional to C. Must be strictly positive. The penalty is a squared l2 penalty.

- kernel: {'linear', 'poly', 'rbf', 'sigmoid', 'precomputed'} or callable, default='rbf'

Specifies the kernel type to be used in the algorithm. If none is given, 'rbf' will be used. If a callable is given, it is used to pre-compute the kernel matrix from data matrices; that matrix should be an array of shape (n_samples, n_samples).

- degree: int, default=3

Degree of the polynomial kernel function (‘poly’). Must be non-negative. Ignored by all other kernels.

- gamma: {'scale', 'auto'} or float, default='scale'

Kernel coefficient for 'rbf', 'poly' and 'sigmoid'.

If gamma='scale' (default) is passed, it uses 1 / (n_features * X.var()) as the value of gamma.

If 'auto', it uses 1 / n_features.

If float, it must be non-negative.

- coef0: float, default=0.0

Independent term in kernel function. It is only significant in 'poly' and 'sigmoid'.

- shrinking: bool, default=True

Whether to use the shrinking heuristic.

- probability: bool, default=False

Whether to enable probability estimates. This must be enabled prior to calling fit, will slow down that method as it internally uses 5-fold cross-validation, and predict_proba may be inconsistent with predict.

- tol: float, default=1e-3

Tolerance for stopping criterion.

- cache_size: float, default=200

Specify the size of the kernel cache (in MB).

- verbose: bool, default=False

Enable verbose output. Note that this setting takes advantage of a per-process runtime setting in libsvm that, if enabled, may not work properly in a multithreaded context.

- max_iter: int, default=-1

Hard limit on iterations within solver, or -1 for no limit.

- decision_function_shape: {'ovo', 'ovr'}, default='ovr'

Whether to return a one-vs-rest (‘ovr’) decision function of shape (n_samples, n_classes) as all other classifiers, or the original one-vs-one (‘ovo’) decision function of libsvm which has shape (n_samples, n_classes * (n_classes - 1) / 2). However, note that internally, one-vs-one (‘ovo’) is always used as a multi-class strategy to train models; an ovr matrix is only constructed from the ovo matrix. The parameter is ignored for binary classification.

- break_ties: bool, default=False

If true, decision_function_shape='ovr', and number of classes > 2, predict will break ties according to the confidence values of decision_function; otherwise the first class among the tied classes is returned. Please note that breaking ties comes at a relatively high computational cost compared to a simple predict.

- random_state: int, RandomState instance or None, default=None

Controls the pseudo random number generation for shuffling the data for probability estimates. Ignored when probability is False. Pass an int for reproducible output across multiple function calls. See Glossary.
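The way distance_metric, membership_decay and beta interact can be sketched in plain Python. This is a minimal, dependency-free illustration and not the fsvm implementation: the helper names are hypothetical, and the two decay formulas (a linear form 1 - d / (d_max + delta) and an exponential form 2 / (1 + exp(beta * d))) are assumptions based on forms commonly used in the fuzzy SVM literature. The formulas the library actually applies may differ, for example by normalizing distances per class.

```python
import math
from statistics import mean


def class_centroids(X, y):
    """Per-class arithmetic-mean centroid (the 'euclidean' centroid case)."""
    centroids = {}
    for label in set(y):
        rows = [x for x, lab in zip(X, y) if lab == label]
        centroids[label] = [mean(col) for col in zip(*rows)]
    return centroids


def centroid_distances(X, y):
    """Euclidean distance of every sample to the centroid of its own class."""
    centroids = class_centroids(X, y)
    return [math.dist(x, centroids[lab]) for x, lab in zip(X, y)]


def linear_decay(distances, delta=1e-6):
    """Assumed linear decay: 1 - d / (max(d) + delta)."""
    d_max = max(distances)
    return [1.0 - d / (d_max + delta) for d in distances]


def exponential_decay(distances, beta=0.1):
    """Assumed exponential decay: 2 / (1 + exp(beta * d))."""
    return [2.0 / (1.0 + math.exp(beta * d)) for d in distances]


# Toy data: three samples of class 0 and two samples of class 1.
X = [[0.0, 0.0], [2.0, 0.0], [1.0, 3.0], [10.0, 10.0], [12.0, 10.0]]
y = [0, 0, 0, 1, 1]

d = centroid_distances(X, y)
m_lin = linear_decay(d)
m_exp = exponential_decay(d)
```

In both variants the sample farthest from its class centroid (the third one) receives the lowest membership degree, which matches the note that larger distance values lead to lower membership.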

- Attributes:

- distance_: ndarray of shape (n_samples,)

Calculated distance of each sample to the expected value according to the distance_metric parameter.

- membership_degree_: ndarray of shape (n_samples,)

Calculated membership degree of each sample according to distance_metric and membership_decay.

- class_weight_: ndarray of shape (n_classes,)

Multipliers of parameter C for each class based on class imbalance.

- classes_: ndarray of shape (n_classes,)

The class labels.

- coef_: ndarray of shape (n_classes * (n_classes - 1) / 2, n_features)

Weights assigned to the features (coefficients in the primal problem). This is only available in the case of a linear kernel.

coef_ is a readonly property derived from dual_coef_ and support_vectors_.

- dual_coef_: ndarray of shape (n_classes - 1, n_SV)

Dual coefficients of the support vector in the decision function, multiplied by their targets. For multiclass, coefficient for all 1-vs-1 classifiers. The layout of the coefficients in the multiclass case is somewhat non-trivial.

- fit_status_: int

0 if correctly fitted, 1 otherwise (will raise warning).

- intercept_: ndarray of shape (n_classes * (n_classes - 1) / 2,)

Constants in decision function.

- n_features_in_: int

Number of features seen during fit.

- feature_names_in_: ndarray of shape (n_features_in_,)

Names of features seen during fit. Defined only when X has feature names that are all strings.

- n_iter_: ndarray of shape (n_classes * (n_classes - 1) // 2,)

Number of iterations run by the optimization routine to fit the model. The shape of this attribute depends on the number of models optimized which in turn depends on the number of classes.

- support_: ndarray of shape (n_SV)

Indices of support vectors.

- support_vectors_: ndarray of shape (n_SV, n_features)

Support vectors. An empty array if kernel is precomputed.

- n_support_: ndarray of shape (n_classes,), dtype=int32

Number of support vectors for each class.

- probA_: ndarray of shape (n_classes * (n_classes - 1) / 2)

- probB_: ndarray of shape (n_classes * (n_classes - 1) / 2)

If probability=True, it corresponds to the parameters learned in Platt scaling to produce probability estimates from decision values. If probability=False, it's an empty array. Platt scaling uses the logistic function 1 / (1 + exp(decision_value * probA_ + probB_)) where probA_ and probB_ are learned from the dataset [3]. For more information on the multiclass case and training procedure see section 8 of [2].

- shape_fit_: tuple of int of shape (n_dimensions_of_X,)

Array dimensions of training vector X.
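The class_weight_ multipliers follow from the formula given for the balanced parameter, n_samples / (n_classes * np.bincount(y)). The sketch below reproduces that formula with the standard library only; the function name is hypothetical, and how fsvm combines these weights with the membership degrees is not shown here.

```python
from collections import Counter


def balanced_class_weights(y):
    """Return n_samples / (n_classes * count) per class, in sorted label order."""
    counts = Counter(y)
    n_samples = len(y)
    n_classes = len(counts)
    return {label: n_samples / (n_classes * counts[label]) for label in sorted(counts)}


# Six samples of class 0 and two of class 1: the minority class gets the larger weight.
y = [0, 0, 0, 0, 0, 0, 1, 1]
weights = balanced_class_weights(y)
print(weights)  # {0: 0.6666666666666666, 1: 2.0}
```

With these weights the effective penalty for a class is C multiplied by its weight, so errors on the minority class cost more than errors on the majority class.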

References

Examples

    >>> from sklearn.datasets import load_iris
    >>> from fsvm import FuzzySVC
    >>> X, y = load_iris(return_X_y=True)
    >>> clf = FuzzySVC().fit(X, y)
    >>> clf.predict(X)
    array([0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 2, 1, 1, 1, 1, 1, 2, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 2, 2, 2, 2, 2, 2, 1, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 1, 2, 2, 2, 2, 2, 2, 1, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 1, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2])

Methods

| Method | Description |
| --- | --- |
| fit(X, y) | Fit the FSVM model according to the given training data. |
| get_metadata_routing() | Get metadata routing of this object. |
| get_params([deep]) | Get parameters for this estimator. |
| predict(X) | A reference implementation of a prediction for a classifier. |
| predict_log_proba(X) | Compute log probabilities of possible outcomes for samples in X. |
| predict_proba(X) | Compute probabilities of possible outcomes for samples in X. |
| score(X, y[, sample_weight]) | Return the mean accuracy on the given test data and labels. |
| set_params(**params) | Set the parameters of this estimator. |
| set_score_request(*[, sample_weight]) | Request metadata passed to the score method. |

- fit(X, y)

Fit the FSVM model according to the given training data.

- Parameters:

- X: {array-like, sparse matrix} of shape (n_samples, n_features) or (n_samples, n_samples)

Training vectors, where n_samples is the number of samples and n_features is the number of features. For kernel="precomputed", the expected shape of X is (n_samples, n_samples).

- y: array-like of shape (n_samples,)

Target values (class labels).

- Returns:

- self: object

Fitted estimator.

Notes

If X and y are not C-ordered and contiguous arrays of np.float64 and X is not a scipy.sparse.csr_matrix, X and/or y may be copied.

If X is a dense array, then the other methods will not support sparse matrices as input.

- get_metadata_routing()

Get metadata routing of this object.

Please check User Guide on how the routing mechanism works.

- Returns:

- routing: MetadataRequest

A MetadataRequest encapsulating routing information.

- get_params(deep=True)

Get parameters for this estimator.

- Parameters:

- deep: bool, default=True

If True, will return the parameters for this estimator and contained subobjects that are estimators.

- Returns:

- params: dict

Parameter names mapped to their values.

- predict(X)

A reference implementation of a prediction for a classifier.

- Parameters:

- X: array-like, shape (n_samples, n_features)

The input samples.

- Returns:

- y: ndarray, shape (n_samples,)

The predicted class label for each sample.

- predict_log_proba(X)

Compute log probabilities of possible outcomes for samples in X.

The model needs to have probability information computed at training time: fit with attribute probability set to True.

- Parameters:

- X: array-like of shape (n_samples, n_features) or (n_samples_test, n_samples_train)

For kernel="precomputed", the expected shape of X is (n_samples_test, n_samples_train).

- Returns:

- T: ndarray of shape (n_samples, n_classes)

Returns the log-probabilities of the sample for each class in the model. The columns correspond to the classes in sorted order, as they appear in the attribute classes_.

Notes

The probability model is created using cross validation, so the results can be slightly different than those obtained by predict. Also, it will produce meaningless results on very small datasets.

- predict_proba(X)

Compute probabilities of possible outcomes for samples in X.

The model needs to have probability information computed at training time: fit with attribute probability set to True.

- Parameters:

- X: array-like of shape (n_samples, n_features)

For kernel="precomputed", the expected shape of X is (n_samples_test, n_samples_train).

- Returns:

- T: ndarray of shape (n_samples, n_classes)

Returns the probability of the sample for each class in the model. The columns correspond to the classes in sorted order, as they appear in the attribute classes_.

Notes

The probability model is created using cross validation, so the results can be slightly different than those obtained by predict. Also, it will produce meaningless results on very small datasets.
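The logistic function quoted for probA_ and probB_, 1 / (1 + exp(decision_value * probA_ + probB_)), can be evaluated directly to see how a decision value maps to a probability. The sketch below covers only the binary case; the parameter values are hypothetical and chosen for illustration, since real values are learned during fit when probability=True.

```python
import math


def platt_probability(decision_value, prob_a, prob_b):
    """Logistic mapping from an SVM decision value to a probability estimate."""
    return 1.0 / (1.0 + math.exp(decision_value * prob_a + prob_b))


# Hypothetical Platt parameters (not learned from data).
prob_a, prob_b = -2.0, 0.0

p_neg = platt_probability(-1.0, prob_a, prob_b)   # decision value on the negative side
p_zero = platt_probability(0.0, prob_a, prob_b)   # exactly on the decision boundary
p_pos = platt_probability(1.0, prob_a, prob_b)    # decision value on the positive side

# A log-probability is simply the logarithm of the probability estimate.
log_p_pos = math.log(p_pos)
```

With a negative prob_a, larger decision values give higher probabilities, and with prob_b equal to zero a sample on the boundary maps to 0.5. Because the fitted prob_b is generally nonzero, the 0.5 probability threshold need not coincide with the decision boundary, which is one reason predict_proba can disagree with predict.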

- score(X, y, sample_weight=None)

Return the mean accuracy on the given test data and labels.

In multi-label classification, this is the subset accuracy which is a harsh metric since you require for each sample that each label set be correctly predicted.

- Parameters:

- X: array-like of shape (n_samples, n_features)

Test samples.

- y: array-like of shape (n_samples,) or (n_samples, n_outputs)

True labels for X.

- sample_weight: array-like of shape (n_samples,), default=None

Sample weights.

- Returns:

- score: float

Mean accuracy of self.predict(X) w.r.t. y.
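Mean accuracy is the (optionally weighted) fraction of samples whose predicted label equals the true label. A minimal stand-alone sketch of that computation follows; the function name is hypothetical and this is an illustration of the metric, not the code path score uses internally.

```python
def mean_accuracy(y_true, y_pred, sample_weight=None):
    """Weighted fraction of positions where the predicted label matches the true one."""
    if sample_weight is None:
        sample_weight = [1.0] * len(y_true)
    correct = sum(w for t, p, w in zip(y_true, y_pred, sample_weight) if t == p)
    return correct / sum(sample_weight)


y_true = [0, 1, 1, 2]
y_pred = [0, 1, 2, 2]

unweighted = mean_accuracy(y_true, y_pred)                      # 3 of 4 correct
weighted = mean_accuracy(y_true, y_pred, [1.0, 1.0, 2.0, 1.0])  # the error carries weight 2
```

Giving the misclassified sample a larger weight lowers the score, which is the effect sample_weight has on the reported accuracy.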

- set_params(**params)

Set the parameters of this estimator.

The method works on simple estimators as well as on nested objects (such as Pipeline). The latter have parameters of the form <component>__<parameter> so that it's possible to update each component of a nested object.

- Parameters:

- **params: dict

Estimator parameters.

- Returns:

- self: estimator instance

Estimator instance.
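The <component>__<parameter> naming convention can be illustrated by splitting a parameter name at its first double underscore. The helper below is a hypothetical, simplified view of how such names decompose, and the step name svc is an assumed example; it does not reproduce the full set_params logic.

```python
def split_param_name(name):
    """Split 'component__parameter' at the first '__'; plain names have no component."""
    component, delimiter, sub_name = name.partition("__")
    if delimiter:
        return component, sub_name
    return None, component


# 'svc' is a hypothetical step name inside a nested object such as a Pipeline.
nested = split_param_name("svc__C")
plain = split_param_name("C")
deep = split_param_name("outer__svc__C")
```

Here nested is ('svc', 'C'), plain is (None, 'C'), and deep is ('outer', 'svc__C'): only the first delimiter is consumed, so the remainder can be handed to the named component and resolved there.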

- set_score_request(*, sample_weight: bool | None | str = '$UNCHANGED$') -> FuzzySVC

Request metadata passed to the score method.

Note that this method is only relevant if enable_metadata_routing=True (see sklearn.set_config()). Please see User Guide on how the routing mechanism works.

The options for each parameter are:

- True: metadata is requested, and passed to score if provided. The request is ignored if metadata is not provided.
- False: metadata is not requested and the meta-estimator will not pass it to score.
- None: metadata is not requested, and the meta-estimator will raise an error if the user provides it.
- str: metadata should be passed to the meta-estimator with this given alias instead of the original name.

The default (sklearn.utils.metadata_routing.UNCHANGED) retains the existing request. This allows you to change the request for some parameters and not others.

Added in version 1.3.

Note

This method is only relevant if this estimator is used as a sub-estimator of a meta-estimator, e.g. used inside a Pipeline. Otherwise it has no effect.

- Parameters:

- sample_weight: str, True, False, or None, default=sklearn.utils.metadata_routing.UNCHANGED

Metadata routing for sample_weight parameter in score.

- Returns:

- self: object

The updated object.